When doing 2D graphics there are a few patterns you'll use all the time - points, rectangles, matrices, etc... To make 2D geometry and colors easy to work with, use

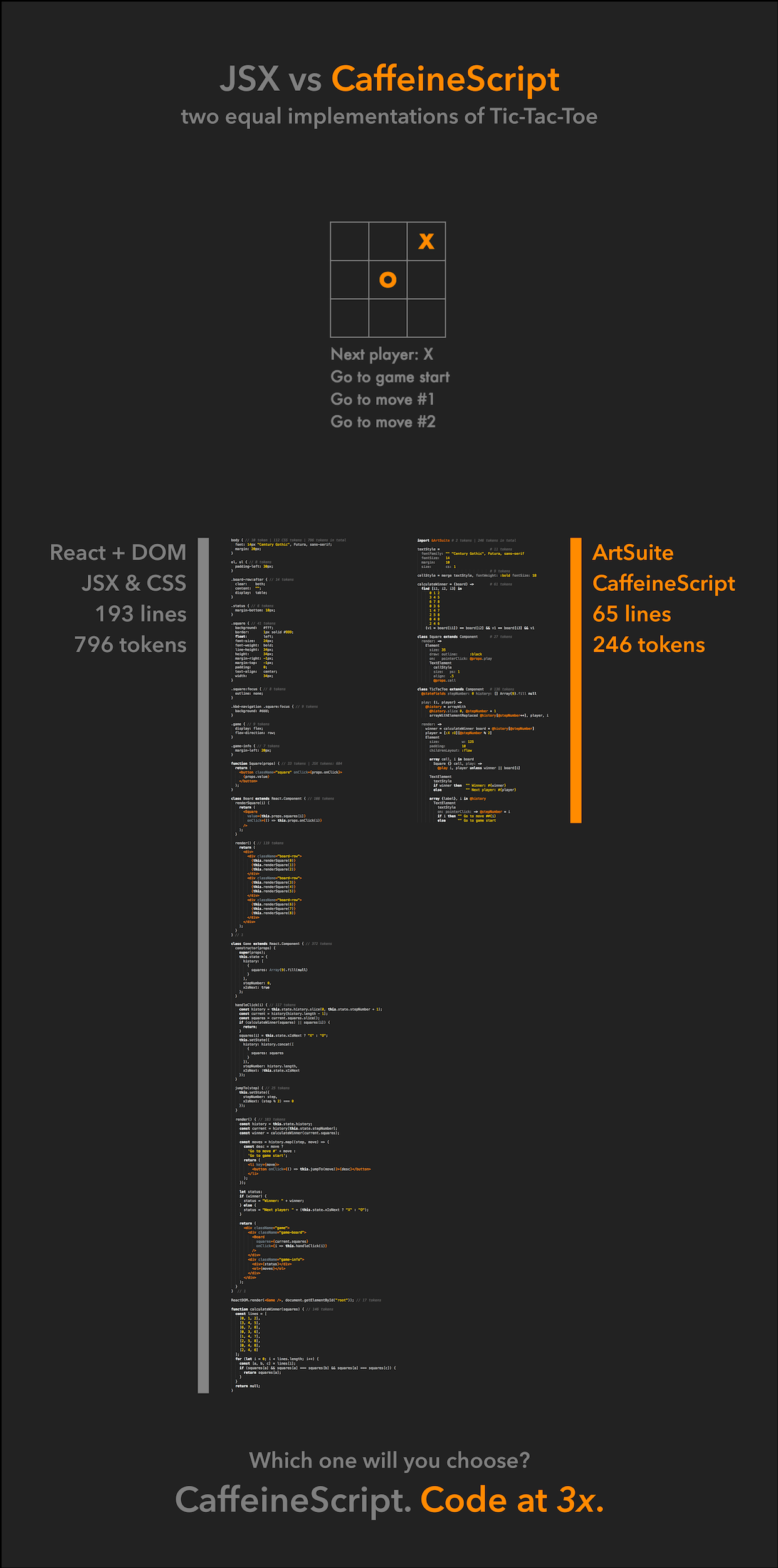

ArtAtomic. The atomic data-types include: Point, Color, Matrix, Rectangle and Perimeter. ArtAtomic provides the foundation for other ArtSuite libraries including ArtEngine, ArtText and ArtBitmap.

Quick Example

p1 = point 10, 20

offset = point 300, 400

area = rect 200, 200, 1000, 500

area.contains p1 # false

area.contains p1.add offset # true!

red = rgbColor "#f00"

blue = red.withHue 2/3

white = red.withSat 0

red2 = hslColor 0, 1, 1

red3 = hslColor 1, 1, 1

red2.eq red # true!

red3.eq red # true!

halfTransparentRed = rgbColor "#f007"

halfTransparentRed.eq red.withAlpha .5 # true!

What Does Atomic Mean?

These classes are designed to be used in a pure-functional way. Never modify their properties. Instead, use their methods to generate new objects.

However, JavaScript performance depends on minimizing the number of objects you create. As such, ArtAtomic offers some ways to sidestep its pure-functional nature by reusing existing objects of the same type. Only do this where you can prove that there are no other references to the object you are reusing. In general, some methods take an extra, optional argument called "into" that specifies where the result should be placed. If that argument is not provided, "into" is set to a new object. In either case, "into" is the value these methods return.
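To make the "into" convention concrete, here is a minimal standalone TypeScript sketch. The `Vec2` class and its `add` signature are hypothetical stand-ins written for illustration; they are not ArtAtomic's actual classes, and the real method signatures may differ.

```typescript
// Hypothetical stand-in for an atomic value type; NOT ArtAtomic's real Point.
class Vec2 {
  constructor(public x = 0, public y = 0) {}

  // Writes the sum into `into` and returns it.
  // When `into` is omitted, a fresh Vec2 is allocated instead.
  add(other: Vec2, into: Vec2 = new Vec2()): Vec2 {
    into.x = this.x + other.x;
    into.y = this.y + other.y;
    return into;
  }
}

const p1 = new Vec2(10, 20);
const offset = new Vec2(300, 400);

// Pure-functional use: p1 and offset are untouched, sum is a new object.
const sum = p1.add(offset);

// Reuse: safe only because nothing else holds a reference to scratch.
const scratch = new Vec2();
const reused = p1.add(offset, scratch);
```

In this sketch `reused` and `scratch` are the same object, so a hot loop can keep one scratch value instead of allocating a new result on every iteration.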

Color (primary props: r, g, b, a)

The Color class provides many tools for manipulating colors. It works in RGBA and HSLA color-spaces. It supports converting to and from many standard string-formats: "#FFF" and "rgb(255, 255, 255)" for starters.

One simple, cool example: rgbColor("#f00").withHue(2/3) == "#00f"
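As a rough illustration of what converting from those string formats involves, the following standalone TypeScript sketch parses hex and "rgb(...)" strings into channel values. It assumes channels are stored as numbers from 0 to 1; `parseColor` and `Rgba` are hypothetical names, not part of ArtAtomic.

```typescript
// Hypothetical color record; assumes each channel is a number in 0..1.
interface Rgba { r: number; g: number; b: number; a: number }

// Parses "#rgb", "#rgba", "#rrggbb", "#rrggbbaa" and "rgb(r, g, b)".
// Returns null for anything it does not recognize.
function parseColor(text: string): Rgba | null {
  const input = text.trim();
  const hex = /^#([0-9a-f]{3,4}|[0-9a-f]{6}|[0-9a-f]{8})$/i.exec(input);
  if (hex) {
    let digits: string = hex[1] ?? "";
    // Expand shorthand digits: "f00" becomes "ff0000".
    if (digits.length <= 4) {
      digits = digits.split("").map((d) => d + d).join("");
    }
    const full = digits;
    const channel = (i: number): number =>
      parseInt(full.slice(i * 2, i * 2 + 2), 16) / 255;
    return {
      r: channel(0),
      g: channel(1),
      b: channel(2),
      a: full.length === 8 ? channel(3) : 1,
    };
  }
  const rgb = /^rgb\(\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*\)$/i.exec(input);
  if (rgb) {
    // Clamp each 0..255 integer and scale it to 0..1.
    const scaled = (s: string | undefined): number =>
      Math.min(255, Number(s ?? "0")) / 255;
    return { r: scaled(rgb[1]), g: scaled(rgb[2]), b: scaled(rgb[3]), a: 1 };
  }
  return null;
}

const whiteFromHex = parseColor("#FFF");
const whiteFromRgb = parseColor("rgb(255, 255, 255)");
```

Both calls yield the same record here, with every channel equal to 1.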

Point (primary props: x, y)

Points are the foundation of any geometry framework. These points are all 2D: x and y. This library lets you perform math and comparisons on points: point(1, 2).add(point(3,4)).average(). You can also treat points as vectors: point1.dot(point2) and point1.cross(point2) return the dot and cross products, and point2.magnitude returns the magnitude of a point.
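For reference, the vector operations named above follow the usual 2D definitions. This standalone TypeScript sketch spells them out; the `Pt` type and the free functions are illustrative assumptions, not ArtAtomic's implementation.

```typescript
// Hypothetical 2D point record used only for this sketch.
interface Pt { x: number; y: number }

// Component-wise sum; returns a new point and leaves both inputs untouched.
const addPts = (a: Pt, b: Pt): Pt => ({ x: a.x + b.x, y: a.y + b.y });

// Dot product: a.x*b.x + a.y*b.y.
const dot = (a: Pt, b: Pt): number => a.x * b.x + a.y * b.y;

// 2D cross product: the scalar z-component of the 3D cross product.
const cross = (a: Pt, b: Pt): number => a.x * b.y - a.y * b.x;

// Euclidean length of the vector from (0, 0) to p.
const magnitude = (p: Pt): number => Math.hypot(p.x, p.y);

const v1: Pt = { x: 3, y: 4 };
const v2: Pt = { x: 1, y: 0 };

const total = addPts(v1, v2);   // { x: 4, y: 4 }
const d = dot(v1, v2);          // 3
const c = cross(v1, v2);        // -4
const len = magnitude(v1);      // 5 (a 3-4-5 triangle)
```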

Rectangle (primary props: x, y, w, h)

Rectangles specify their upper-left-hand corner and their width and height. Strictly speaking, the upper-left point is included in the rectangle and the lower-right point is not: rect(1,2,3,4).contains(point 1, 2) is true, but rect(1,2,3,4).contains(point 4, 6) is false.
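The inclusive/exclusive rule amounts to a half-open interval test on each axis. A minimal standalone TypeScript sketch, using a hypothetical `Rect` record and `contains` function rather than ArtAtomic's own:

```typescript
// Hypothetical rectangle record: upper-left corner plus width and height.
interface Rect { x: number; y: number; w: number; h: number }

// Half-open test: the left/top edges are inside, the right/bottom edges are not.
function contains(r: Rect, px: number, py: number): boolean {
  return px >= r.x && px < r.x + r.w && py >= r.y && py < r.y + r.h;
}

const box: Rect = { x: 1, y: 2, w: 3, h: 4 };

const upperLeftInside = contains(box, 1, 2);   // true
const lowerRightInside = contains(box, 4, 6);  // false: 4 = x + w and 6 = y + h
```

One consequence of the half-open rule is that rectangles sharing an edge never both contain the same point.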

Rectangle has many useful methods in addition to basic arithmetic and comparison including: union, intersection, cutout, nearestInsidePoint and more...
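As a sketch of what union and intersection typically compute, the standalone TypeScript below treats union as the smallest rectangle covering both inputs and returns a zero-size rectangle for an empty intersection. Both behaviors are assumptions made for illustration; ArtAtomic's methods may handle these cases differently.

```typescript
// Hypothetical rectangle record: upper-left corner plus width and height.
interface Rect { x: number; y: number; w: number; h: number }

// Smallest rectangle that covers both a and b (assumed meaning of "union").
function union(a: Rect, b: Rect): Rect {
  const x = Math.min(a.x, b.x);
  const y = Math.min(a.y, b.y);
  const right = Math.max(a.x + a.w, b.x + b.w);
  const bottom = Math.max(a.y + a.h, b.y + b.h);
  return { x, y, w: right - x, h: bottom - y };
}

// Overlapping region; width and height clamp to 0 when there is no overlap.
function intersection(a: Rect, b: Rect): Rect {
  const x = Math.max(a.x, b.x);
  const y = Math.max(a.y, b.y);
  const right = Math.min(a.x + a.w, b.x + b.w);
  const bottom = Math.min(a.y + a.h, b.y + b.h);
  return { x, y, w: Math.max(0, right - x), h: Math.max(0, bottom - y) };
}

const r1: Rect = { x: 0, y: 0, w: 10, h: 10 };
const r2: Rect = { x: 5, y: 5, w: 10, h: 10 };

const both = union(r1, r2);           // { x: 0, y: 0, w: 15, h: 15 }
const overlap = intersection(r1, r2); // { x: 5, y: 5, w: 5, h: 5 }
```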

Matrix (primary props: tx, ty, sx, sy, shx, shy)

This 2D matrix library provides all the standard matrix operations: translate, scale, rotate, mul(tiply), invert and, most importantly, myMatrix.transform(myPoint) => myTransformedPoint.
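The six properties describe a 2D affine transform. The standalone TypeScript sketch below assumes the common layout x' = sx*x + shx*y + tx and y' = shy*x + sy*y + ty; that layout, the `Affine` type and the helper names are assumptions for illustration, so check ArtAtomic's source before relying on the exact convention.

```typescript
// Hypothetical affine matrix record using the property names listed above.
interface Affine { sx: number; sy: number; shx: number; shy: number; tx: number; ty: number }
interface Pt { x: number; y: number }

const identity: Affine = { sx: 1, sy: 1, shx: 0, shy: 0, tx: 0, ty: 0 };

// Builders return new matrices rather than mutating an existing one.
const translation = (tx: number, ty: number): Affine => ({ ...identity, tx, ty });
const scaling = (s: number): Affine => ({ ...identity, sx: s, sy: s });

// Assumed layout: x' = sx*x + shx*y + tx,  y' = shy*x + sy*y + ty.
function transform(m: Affine, p: Pt): Pt {
  return {
    x: m.sx * p.x + m.shx * p.y + m.tx,
    y: m.shy * p.x + m.sy * p.y + m.ty,
  };
}

// Composition: the result applies `first`, then `second`.
function mul(first: Affine, second: Affine): Affine {
  return {
    sx: second.sx * first.sx + second.shx * first.shy,
    shx: second.sx * first.shx + second.shx * first.sy,
    tx: second.sx * first.tx + second.shx * first.ty + second.tx,
    shy: second.shy * first.sx + second.sy * first.shy,
    sy: second.shy * first.shx + second.sy * first.sy,
    ty: second.shy * first.tx + second.sy * first.ty + second.ty,
  };
}

// Scale by 2, then move 10 units right.
const scaleThenMove = mul(scaling(2), translation(10, 0));
const moved = transform(scaleThenMove, { x: 1, y: 1 }); // { x: 12, y: 2 }
```

Composing once and transforming many points with the combined matrix gives the same result as applying each step in turn.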

Perimeter (primary props: left, right, top, bottom)

The most recent addition to ArtAtomic is Perimeter. This is primarily used to express margins and padding in ArtEngine. Currently there are only a few additional methods on top of the standard arithmetic and comparison operations.